Nvidia isn’t the only AI chip supplier in town.

Early signals in hiring and partnerships show the compute mix starting to split. Lead times, cost, and concentration risk are pushing teams to add a second path. Compute availability remains tight, which is pushing buyers to source capacity from more than one vendor.

Anthropic is an early test case. The company is helping to develop Amazon’s Trainium AI chips, which it plans to use to train its next Claude model, and is expanding its use of Google’s TPU chips. These moves add capacity beyond Nvidia and lessen Anthropic’s reliance on Nvidia hardware.

Using CB Insights customer sentiment interviews, hiring insights, investments, and partnership activity, we analyze:

- Why Nvidia dominated and what’s different now

- Anthropic as an early example of diversification

- Beyond Anthropic, signals of a multi‑chip market

Why Nvidia dominated and what’s different now

Nvidia’s historic dominance in the AI chip space stems from its software and hardware ecosystem, and while that foundation remains strong, alternatives have improved enough to enable hedging.

CUDA (Nvidia’s software platform and programming model for running AI on its GPUs) and its supporting toolchain shorten adoption time and reduce delivery risk. For most teams, switching to another supplier means rewriting code, retraining people, and revalidating models, a tax few companies can absorb, especially when developing new AI solutions.

CB Insights customer sentiment interviews echo this lock-in. For example, one founder at a software engineering company said, “since all of our infrastructure is built on CUDA … the main challenge in switching … is the infrastructure and software ecosystems. The learning cost … is unknown, and that uncertainty is a barrier.”

And because CUDA software requires specialized knowledge, this lock-in shows up in AI company hiring data. For example, Baseten is hiring a GPU Kernel Engineer to implement custom CUDA kernels across current‑gen Nvidia GPUs. That is a direct signal of Nvidia‑specific optimization work that raises near‑term switching costs.

But parts of this story are changing. AMD’s software has materially improved since late 2024. Issues that once blocked deployments are less frequent, and fixes land faster. Parity is not universal by workload, but the friction gap is narrowing enough to allow some teams to start diversifying.

Anthropic as an early example of diversification

Against this backdrop, Anthropic is the early test of real diversification.

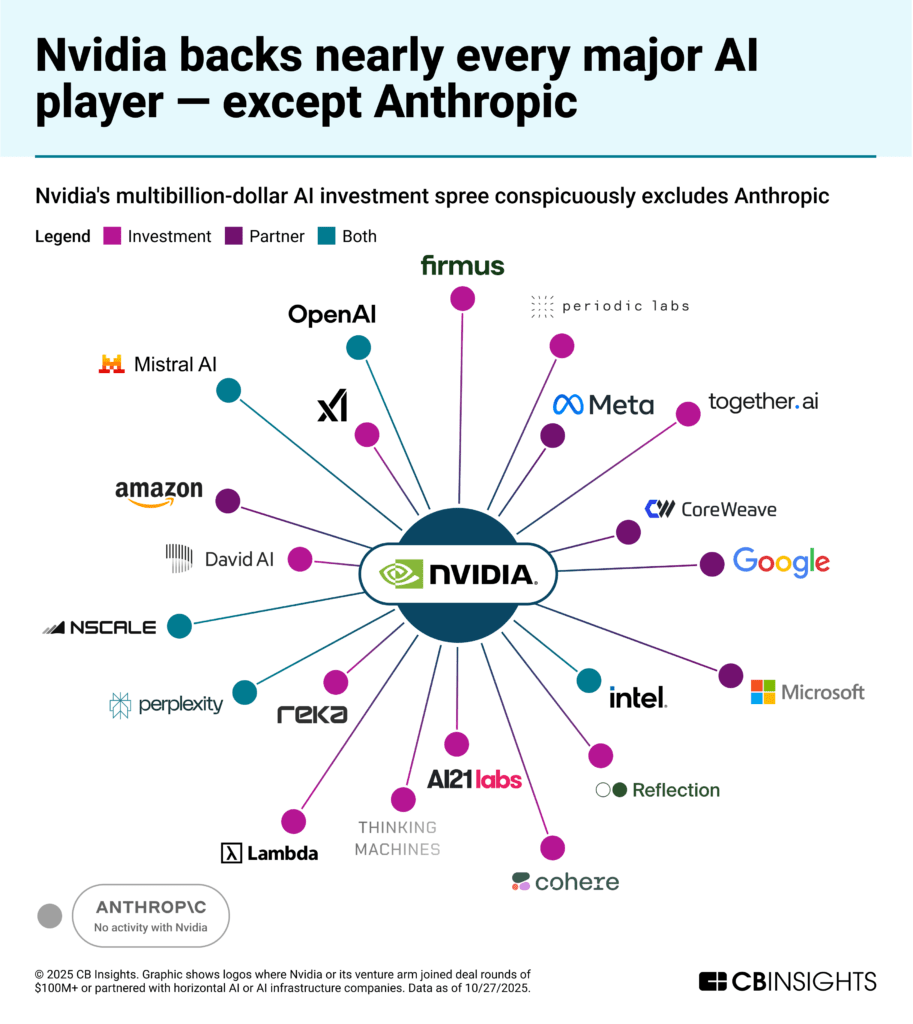

Anthropic is the notable exception to Nvidia’s investments across leading AI companies. The two have recently clashed over export controls, a sign that the relationship remains strained.

Anthropic’s relationship with Amazon further signals a path away from sole reliance on Nvidia. This relationship began in September 2023, when Amazon announced a $4B investment in Anthropic and, in turn, Anthropic named AWS its primary cloud provider. This meant distribution of Anthropic’s Claude models through AWS’s Bedrock service, as well as access to the Trainium AI chips that Amazon has been developing since 2020.

Since then, the two appear to be working in tandem to diversify away from Nvidia chips. AWS, for instance, recently said more than half of its Bedrock service now runs on its custom Trainium AI chips. In addition, Anthropic has recently committed to training the next-generation Claude model on Trainium chips through Amazon’s Project Rainier data center deployment.

And Anthropic isn’t just relying on Amazon to help it move away from Nvidia chips.

In October 2025, Anthropic announced it will expand its use of Google TPUs — Google’s specialized AI chips — to up to one million units. Until then Google kept most TPU capacity for internal use; opening it at Anthropic scale signals both Anthropic’s push to add non‑Nvidia supply and Google’s bet that more buyers want alternatives. That shift on both sides sets up the broader signals of a multi‑chip market.

Beyond Anthropic, signals of a multi‑chip market

As software improves and compute remains hard to source, adopting alternatives is easier than it was a year ago. Signals include earnings calls, hiring, and partnerships.

In recent earnings calls, Amazon has highlighted persistent AI capacity constraints as a driver of its strategic push to develop Trainium chips. The company notes that AI demand is outpacing supply, creating an opening to build its own capacity with Trainium. But Trainium supply is still limited, forcing buyers to hedge delivery risk by lining up other non‑Nvidia capacity. For Anthropic, that likely meant adding Google TPUs alongside Trainium.

Hiring signals also show the trend gaining traction with other AI startups:

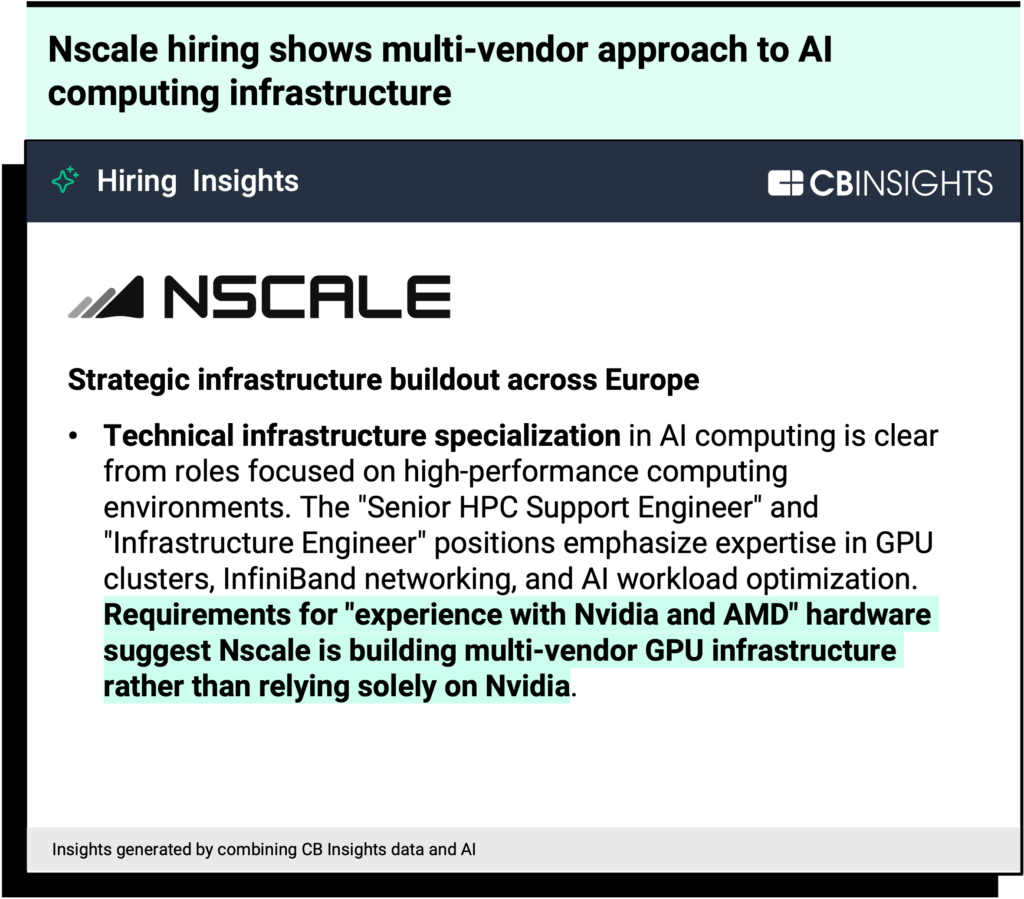

- Nscale, a data center hyperscaler, is hiring core infra roles that require “experience with Nvidia and AMD.” Nscale is positioning itself as a primary EU compute supplier, targeting data‑local workloads and a diversified supply chain that may shift some regional AI compute away from Nvidia.

- TensorWave, on the other hand, uses explicit AMD‑powered positioning (“building the Infrastructure for AMD‑Powered AI Computing”) and mentions that the company has deployed North America’s largest AMD GPU cluster. The company is projecting $100M in ARR by year’s end, signaling the market’s appetite for non-Nvidia compute.

- Groq, an infra provider developing its own language processing unit (LPU) chip to compete with conventional Nvidia/AMD GPUs, is still recruiting to “support evolving Nvidia and AMD GPU architectures,” indicating cross‑vendor software support even as it promotes its own LPUs.

Beyond hiring and capacity, anchor deals are pushing diversification. OpenAI is lining up multi‑GW compute across Nvidia, AMD, and Broadcom, with Broadcom focused on custom, jointly designed chips. Oracle — a key OpenAI compute partner — plans roughly 50,000 next‑gen AMD accelerators starting in Q3’26 with expansion through at least 2027, reinforcing the move away from a single‑supplier model.

For the market overall, as reliance on Nvidia eases at the margin, compute supply spreads across Nvidia, AMD, and cloud custom silicon. That expands access, improves tokens‑per‑second and latency, and reduces lead‑time risk for startups. Where throughput and latency improve, usage rises and spend follows — especially for teams already maxed on capacity.

As compute comes from multiple vendors, expect a larger market for software that abstracts chip differences so teams can focus on product rather than implementation.
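To make "software that abstracts chip differences" concrete, here is a minimal sketch of what such a layer might look like. It is illustrative only: the `Backend` class, the registry, and the vendor names (`gpu_vendor_a`, `gpu_vendor_b`) are hypothetical and do not correspond to any real vendor API, and a plain-Python matrix multiply stands in for vendor-specific kernels.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Sequence

Matrix = List[List[float]]


@dataclass(frozen=True)
class Backend:
    """A hypothetical accelerator backend (assumption, not a real vendor API)."""
    name: str
    is_available: Callable[[], bool]
    matmul: Callable[[Matrix, Matrix], Matrix]


def _reference_matmul(a: Matrix, b: Matrix) -> Matrix:
    # Plain-Python matrix multiply standing in for a vendor-specific kernel.
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


REGISTRY: Dict[str, Backend] = {}


def register(backend: Backend) -> None:
    # Each vendor integration registers itself under a stable name.
    REGISTRY[backend.name] = backend


def pick_backend(preference: Sequence[str]) -> Backend:
    # Return the first preferred backend that is registered and available.
    for name in preference:
        backend = REGISTRY.get(name)
        if backend is not None and backend.is_available():
            return backend
    raise RuntimeError(f"no available backend among: {list(preference)}")


# Hypothetical setup: vendor A capacity is unavailable, vendor B is available.
register(Backend("gpu_vendor_a", lambda: False, _reference_matmul))
register(Backend("gpu_vendor_b", lambda: True, _reference_matmul))
register(Backend("cpu", lambda: True, _reference_matmul))

# Application code states a preference order and never names a vendor again.
chosen = pick_backend(["gpu_vendor_a", "gpu_vendor_b", "cpu"])
result = chosen.matmul([[1.0, 2.0]], [[3.0], [4.0]])
```

In this sketch the product code calls `chosen.matmul` and never touches vendor-specific details, so a supply shortfall at one vendor becomes a change in the preference list rather than a rewrite.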
