Lasso Security

Founded Year

2023

Stage

Seed | Alive

Total Raised

$14.5M

Last Raised

$8.5M | 1 yr ago

Revenue

$0000

Mosaic Score

The Mosaic Score is an algorithm that measures the overall financial health and market potential of private companies.

+81 points in the past 30 days

About Lasso Security

Lasso Security focuses on securing interactions with generative artificial intelligence (GenAI) and large language model (LLM) technologies. The company offers a GenAI security platform that monitors GenAI interactions, detects risks, and helps organizations manage their GenAI activities. Lasso Security's solutions aim to help organizations comply with AI regulations and assist with risk and governance, including data breach prevention and security policy implementation. It was founded in 2023 and is based in Tel Aviv, Israel.

ESPs containing Lasso Security

The ESP matrix leverages data and analyst insight to identify and rank leading companies in a given technology landscape.

The AI security market provides specialized solutions designed to protect machine learning models, algorithms, and AI applications from AI-specific threats including adversarial attacks, data poisoning, model evasion, backdoor injections, prompt injection, and model theft. These vendors offer products spanning the entire AI lifecycle, including secure model development frameworks, runtime protecti…

Lasso Security is named as a Challenger among 15 other companies, including Chainguard, Snyk, and Skyflow.

Lasso Security's Products & Differentiators

Lasso for Employees

Protecting employees using chat bots

Research containing Lasso Security

Get data-driven expert analysis from the CB Insights Intelligence Unit.

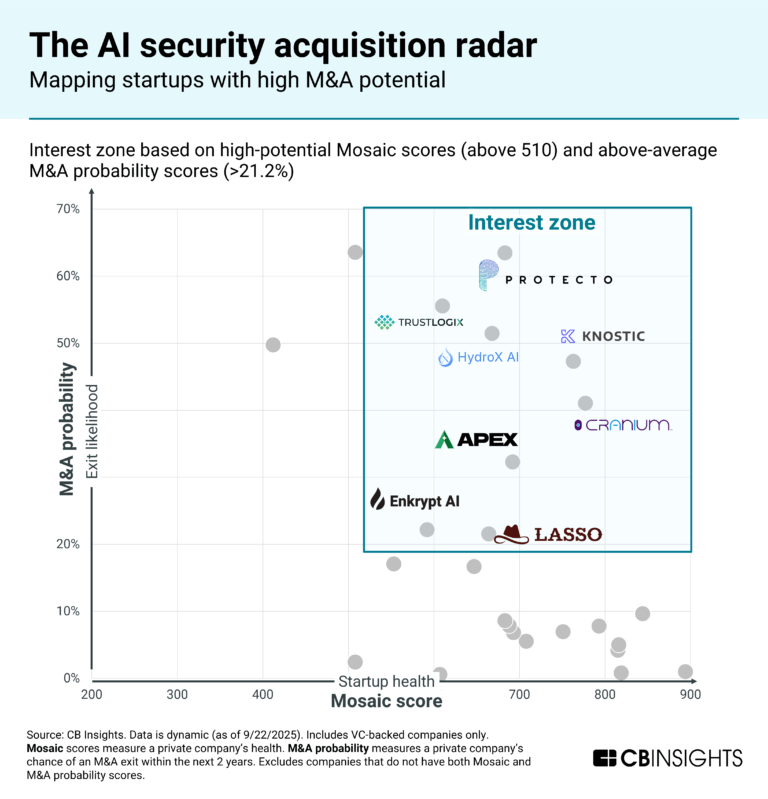

CB Insights Intelligence Analysts have mentioned Lasso Security in 5 CB Insights research briefs, most recently on Sep 25, 2025.

Sep 5, 2025 report

Book of Scouting Reports: The AI Agent Tech Stack

Mar 21, 2025 report

7 tech M&A predictions for 2025

Oct 8, 2024

3 trends to watch in the hot AI security market

Feb 5, 2024

6 cybersecurity markets gaining momentum in 2024

Expert Collections containing Lasso Security

Expert Collections are analyst-curated lists that highlight the companies you need to know in the most important technology spaces.

Lasso Security is included in 4 Expert Collections, including Cybersecurity.

Cybersecurity

11,028 items

These companies protect organizations from digital threats.

Generative AI

2,951 items

Companies working on generative AI applications and infrastructure.

Artificial Intelligence (AI)

9,236 items

AI agents & copilots

1,772 items

Companies developing AI agents, assistants/copilots, and agentic infrastructure. Includes pure-play emerging agent startups as well as companies building agent offerings with varying levels of autonomy.

Latest Lasso Security News

Nov 3, 2025

Security experts warn that even seemingly private AI conversations can expose sensitive data, from personal information to corporate secrets, and offer tips to use ChatGPT, Claude, and other tools safely.

Rani Ben Shaul 11:50, 03.11.25

When we ask ChatGPT a question or upload a file to Claude, it often feels like having a private, temporary conversation with a personal assistant. But behind that seemingly convenient and confidential interface lies a different reality: the information is transmitted to remote servers, may be stored, and in some cases, even used to train future models or end up in unintended hands.

This gap between perception and reality creates a genuine problem. Many people use AI tools for work, uploading sensitive data or connecting them to email and calendars, without fully understanding the risks. And these risks are far from theoretical: from leaking corporate information to unintentionally running malicious code generated by AI, the dangers are real and require awareness.

This guide aims to help you use AI tools safely, enjoying their benefits without exposing yourself or your organization to unnecessary risks. To prepare it, we spoke with Lior Ziv, CTO and co-founder of Lasso Security, a company specializing in generative AI security.

How can we prevent information leaks when using chatbots?

The best protection, says Ziv, is to use a corporate license. “When an employer purchases an enterprise license for ChatGPT, for example, the information written in chats doesn’t reach OpenAI to train future models,” he explains. “Any data used for training becomes part of the model’s brain, it could later appear in someone else’s answers.” Enterprise licenses also allow companies to control permissions and store data in isolated environments. For individuals without a corporate plan, Ziv recommends disabling data use for training in the privacy settings of the chat application.

If I turned off training, what should I still avoid sharing?

The basic rule: don’t share personal or sensitive information such as ID numbers, credit card details, or home addresses. But Ziv notes that the decision about what to share ultimately depends on each person’s level of comfort. “I’d compare it to social networks, some people share more, others less,” he says. “If you’re uncomfortable with the amount of information being collected about you, limit what you share.”

When using AI for work, however, the guidelines are clearer: follow your company’s policy. Yet, Ziv warns, the danger isn’t only what you give to the model, it’s also what you take from it. “If you use AI to generate code and then implement it directly into a product,” he explains, “you could inadvertently introduce malicious code into your organization’s systems.” His advice: review and cross-check any code generated by AI, ideally using another model or manual inspection.

With so many AI tools available, how can we tell which are safe to use?

Stick to tools from well-established, reputable companies, says Ziv. If you encounter an unfamiliar tool, research it: check how long it’s been available, what reviews say, and read its terms of service, especially the section on permissions. “If the permissions requested exceed what the product actually needs to do,” Ziv warns, “that’s a red flag.”

And what about Chinese AI tools?

Here, Ziv’s stance is unequivocal: “Don’t use them.” A test conducted by Lasso on DeepSeek’s model revealed critical failures in nearly every aspect of security, except when discussing the Chinese government. “Any data that goes into these models could reach the Chinese government,” Ziv cautions. If you still wish to experiment with such tools, he suggests doing so only in controlled environments like AWS, ensuring the data doesn’t reach external servers.

Lasso Security Frequently Asked Questions (FAQ)

When was Lasso Security founded?

Lasso Security was founded in 2023.

Where is Lasso Security's headquarters?

Lasso Security's headquarters is located at 10 Beit Shamai, Tel Aviv.

What is Lasso Security's latest funding round?

Lasso Security's latest funding round is Seed.

How much did Lasso Security raise?

Lasso Security raised a total of $14.5M.

Who are the investors of Lasso Security?

Investors of Lasso Security include Plug and Play Silicon Valley summit, AWS & CrowdStrike Cybersecurity Accelerator, Entree Capital, Samsung NEXT, Ferocity Capital and 7 more.

Who are Lasso Security's competitors?

Competitors of Lasso Security include Prompt Security and 3 more.

What products does Lasso Security offer?

Lasso Security's products include Lasso for Employees and 3 more.

Compare Lasso Security to Competitors

Bedrock Data focuses on data security, governance, and management within the data management and cybersecurity sectors. The company provides a platform for data security and governance processes, offering solutions for data discovery, classification, and lifecycle management. It serves various sectors including biotech, finance, healthcare, media & entertainment, retail, and technology, by providing a view and control over data assets. Bedrock Data was formerly known as Bedrock Security. It was founded in 2021 and is based in Menlo Park, California.

Acuvity provides artificial intelligence security and governance solutions, focusing on enabling safe adoption across various enterprises. The company offers a platform that allows employees to securely use AI applications, chatbots, and services while providing tools for full compliance, data protection, and risk management. Its solutions cater to a range of stakeholders including legal teams, security teams, and application builders. The company was founded in 2023 and is based in Sunnyvale, California.

WitnessAI focuses on artificial intelligence (AI) security and governance within the enterprise software industry. The company offers a platform that provides monitoring, policy enforcement, and protection for AI applications in business environments. WitnessAI primarily serves sectors that require robust AI security and governance solutions. It was founded in 2023 and is based in Mountain View, California.

Portal26 is a company that provides a platform within the AI security and governance sector. The company offers services such as AI visibility and governance, security measures for generative AI, and tools for managing AI risks, strategies, and policies. It aims to assist businesses in managing their AI investments and ensuring responsible AI adoption. Portal26 was formerly known as Titaniam. It was founded in 2019 and is based in San Jose, California.

Opsin provides security solutions for generative artificial intelligence within the cybersecurity domain. The company offers a security orchestration layer that protects data connections, reduces oversharing, and manages access control for GenAI applications. It serves enterprises seeking to adopt GenAI technologies securely and responsibly. The company was founded in 2024 and is based in San Jose, California.

Private AI focuses on privacy solutions in the technology sector, particularly in the identification, redaction, and replacement of personally identifiable information (PII) in various data formats. The company provides services such as PII detection, de-identification, synthetic PII generation, and tokenization and pseudonymization, aimed at protecting sensitive data and ensuring compliance with privacy regulations. Private AI serves sectors that require data privacy measures, including healthcare, banking, insurance, and organizations that use large language models. It was founded in 2019 and is based in Toronto, Canada.
